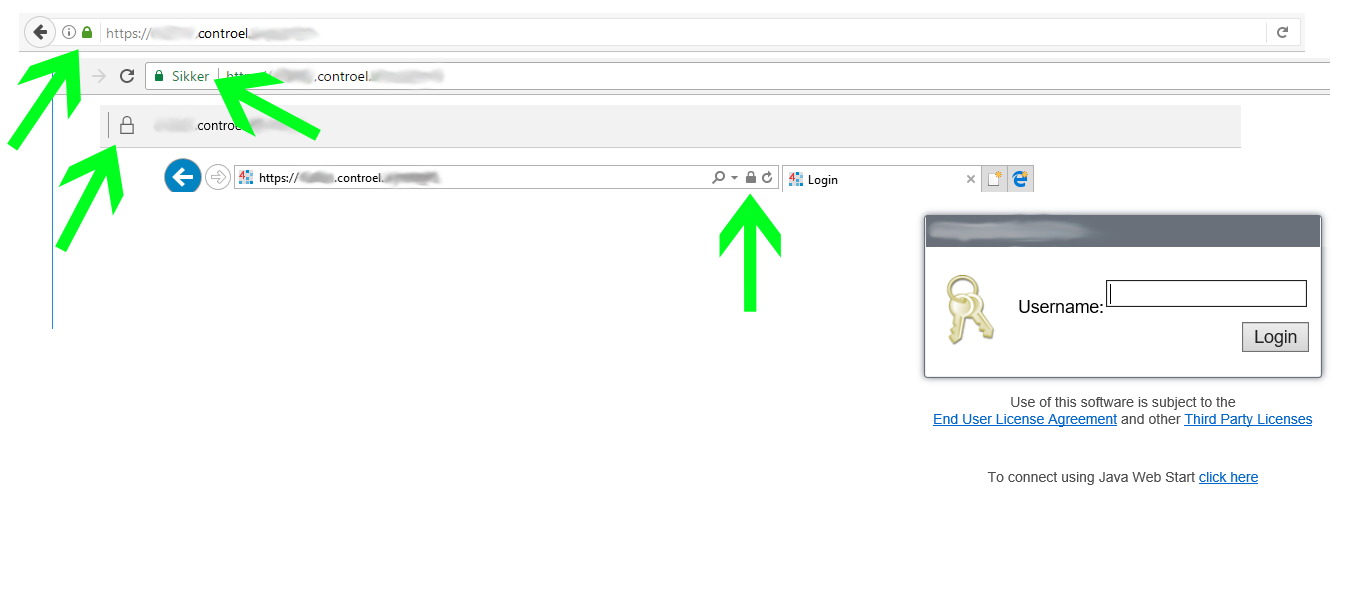

Green padlock, the word “Secure” – and most importantly, no warnings shown to the users and no need to control their devices.

TLS/SSL, Encryption and Certificates

Intended Audience

Primarily Tridium System Integrators wanting a hassle-free method for applying TLS/SSL encryption to their Building Management Systems. However, the principles and architecture apply to any web-based solution that is able to serve its content through a secure connection but does not come with a good embedded certificate solution that includes automatic renewals and browser-trusted certificates.

About this article

This – very long – article is an attempt to help fellow system integrators and others, for the benefit of more secure Building Management Systems, a higher degree of user-friendliness and a lighter workload for system integrators.

I would recommend that you view this article as a webinar. Dedicate the time to actually read and attempt to understand, and I’m confident that you’ll find your time well spent.

The article is divided into sections that have different background colours, allowing you to conveniently navigate to the appropriate section. In addition, the links below will take you straight to the relevant sections.

- Background Information – Beginners Section

- Background Information – Intermediate Section

- Use-case: Server-based Supervisor Webserver

- Use-case: Embedded Webserver in Controller (JACE)

- Notes – do read these before you actually implement a solution

I should point out that the article is provided as-is, with no warranties. I accept no liability for your use of this article, but make it available to you as a help.

Miscellaneous

This article is intended to provide you with an understanding of how certificates work, and a proposed general architecture you may employ to improve upon your existing solution. The article is therefore not a specific step-by-step guide, and generally speaking I feel that one should not simply follow such a guide without understanding the process behind the steps.

I am however open to creating a step-by-step guide for this setup if there is enough demand for it. For now – get comfortable and have a good read.

BEGINNERS SECTION

Understanding the Actual Problem

IT processes information. That’s it. Nothing more to it. If you remember that basic principle, it will help your understanding a lot.

Let’s look at why this is a problem. IT processes information without thought, reason or the application of logic. IT will happily (try to) process information that will kill it. Unlike nature, there is no evolution to take care of this problem. The only tool at our disposal, then, is to apply more information to the process, about what things the IT process should steer clear of.

If people eat poisonous berries and fall ill or die, we (collectively) learn that these berries kill us. We can’t put a wrapper on all the berries though, to say “DO NOT EAT”, because we don’t control where the berries appear. Instead, we put information in notes, on websites, in books – and eventually where it makes a difference: in our brains. “Don’t eat the berries that look like that, they can kill you.”

In exactly the same way, we can’t rely on the “bad content” to wrap itself in “bad content wrappers”. Instead, we need to add information to our IT systems that tells them not to process the bad content. So we do. Extensively. This is simple for content that is exclusively bad, but for content that can be both good and bad, it’s not that simple. We can’t just filter out all webpages, for example – there are good ones as well as bad ones.

Since the rule “content is a website” clearly is not sophisticated enough, we need something more advanced.

Trust

IT systems need to be able to trust in varying degrees. Most developers and IT security professionals will frown at this statement because it is easily overinterpreted as “blind trust”. But really, it is the only principle that is manageable in practice if we are to ensure users’ security and privacy without halting progress altogether. It is the more advanced mechanism that we rely on when we can no longer rely on simple conditions of principle.

Again, it’s not so different from humans. Remember the berries from before; if you have not personally fallen ill or passed away from eating those berries, then the only way you would know not to eat them is by received information. On the other hand, if you are stranded in a deserted place and those berries are your only food source, wrongfully thinking that they are unsafe would lead to starvation. So the information – much like our website in this context – can be good or bad. Friendly or malicious. And the way you determine, which information will keep you alive? You choose the source you trust most.

TLS/SSL, encryption and certificates work on the same basis. When a browser throws a warning to say that the site is untrusted, or when it indicates to the user that the content is secure, it is following the same principles: determining whether it can consider this content trusted or not.

The risks of blindfolding

We’ve established that we cannot qualify or disqualify a website, solely based on the fact that it is a website. There are good and bad ones out there, so we need to somehow determine, which we trust.

We could trust everyone. A blindfold. This is obviously a risky strategy that will drastically shorten the life span of all our IT equipment. We could rely on massive databases of what is good and bad, but those require maintenance and make for a very inflexible solution; how do I get my website added? How would the database know I am one of the good guys? And how would it know if one of the good guys took a break, and a bad guy filled the spot? It’s clearly not the solution.

Instead, we’ll need to establish some criteria that decide if we trust the content or not. And preferably, we want to be able to know if we can trust the content before actually opening it. That means that some sensible criteria could be…

- We only trust content that hasn’t been changed from when it left the sender and till it arrives at our “doorstep”.

- We only trust content where we know the sender, or someone we trust tells us that the sender is trustworthy.

- We only trust content, that hasn’t been opened by others.

Simple, right? The first and third are basically the reason the postal service exists, and the second criterion seems like a reasonable precaution if what was inside the envelope could kill you. In addition, if the content had been opened by others (the third criterion), we would risk that the others would react to the content faster than we could. If that was your lottery ticket in the mail, and someone else had already cashed the prize, you’d see the problem quite clearly.

How to achieve trust in IT?

The first and last criteria go hand in hand in IT. Since data shows no evident signs of having been read or tampered with, our only option is to make the content obscure. This prevents unauthorized reading and prevents (intelligent) change of the content; TLS additionally attaches an integrity check to every message, so that tampering is detected. Since computers are essentially fast calculators, encryption is a logical move; we apply various calculations to the content and only share the formula with the intended recipient(s). This way, everyone else reads weird and out-of-context utterances like “sTAn25JraNW”, and the intended recipient with the formula – or “key” – reads “Hello, world!”
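The idea of a shared “formula” can be tried out on the command line. The following is only a minimal sketch, assuming OpenSSL 1.1.1 or newer is installed; the passphrase `example-shared-secret` is made up for illustration, and real TLS negotiates its keys automatically rather than using a typed-in passphrase.

```shell
# Hypothetical shared secret (the "formula") known only to sender and recipient.
KEY='example-shared-secret'

# Sender: encrypt the message. The base64 output is unreadable without the key.
CIPHERTEXT=$(printf '%s' 'Hello, world!' | openssl enc -aes-256-cbc -pbkdf2 -salt -a -pass "pass:$KEY")
echo "Everyone else reads: $CIPHERTEXT"

# Recipient: decrypt with the same key to recover the original message.
PLAINTEXT=$(printf '%s\n' "$CIPHERTEXT" | openssl enc -d -aes-256-cbc -pbkdf2 -a -pass "pass:$KEY")
echo "Intended recipient reads: $PLAINTEXT"
```

Because a random salt is used, the encrypted text differs on every run, while decryption with the right key always returns the same message.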

This mechanism effectively addresses every criterion we set out earlier – except the second one about knowing the sender. But why is this relevant? The data is encrypted; nice and secure, right? Well, a virus is also data, so you could encrypt one of those as well. Not to mention that your favorite webshop www.buystuff.com may have taken every precaution to encrypt and secure your payment information – but they can’t effectively prevent you from navigating to www.buystuf.com and entering your payment information there.

Because of this problem, we need the sender to identify themselves. Now, my name isn’t George, but had I not said that, I could introduce myself as George and you would believe me. So not only do we need the sender to identify themselves – we also need some verification (certification) that the identification is indeed true. This is where certificates and the various types of verification come in – and this is also where we step up the difficulty a bit, so please continue in the intermediate section.

INTERMEDIATE

Identity and Certification in IT

Like your name, devices on a network are named by domain names. Much like your birth certificate certifies that you are you, a certificate in IT certifies, to a lesser or greater extent, that the device is on the domain it claims. Four levels of certification exist:

- Unverified or “self-signed” does nothing to verify anything – more on this below.

- DV – Domain Validation (referred to as Domain Verification in this article) verifies that whoever requests the certificate controls the domain it is issued for.

- OV – Organisation Validation additionally verifies the identity of the organisation registered as owner of the domain.

- EV – Extended Validation applies the strictest checks, verifying that the registered owner of the domain is an active legal entity in existence.

These verifications all mean nothing, if we cannot rely on them to be honest and accurate. For this reason, there are requirements in place to regulate the actual verification process. More on this (large) topic may be found at cabforum.org. Companies who perform these verifications are called Certificate Authorities or CA for short.

The technical process is identical when a Certificate Authority signs a certificate and when you sign a certificate yourself. The difference is, that browsers will trust (recognized) CAs, while they will not trust you. The difference in the end result is whether users are warned that the content may be unsafe and the connection is not to be trusted, or that everything shows up in a secure and trustworthy manner.

Organisation and Extended Verifications are both handled by CAs, and are as such beyond the scope of this article. If you need such a certificate (for example if you process payment information in a webshop), you would likely contact a CA, and they would likely assist you as needed. Instead, we’ll focus on the more “DIY-friendly” Domain Verification, since this is sufficient to achieve our goal of providing a secure, user-friendly and warning-free user experience.

We need to get a few more concepts nailed down first, though.

Self-Signed Certificates

A self-signed certificate can be generated by anyone in less than a minute, so it serves no purpose in terms of verifying anything. It does however enable encryption, so it’s absolutely better than nothing. This is why we use it in e.g. JACE-to-Supervisor communication, or for communication between JACEs.

One major caveat about this type is that (logically) browsers and other applications do not trust a self-signed certificate. This results in warnings for the user, and in some cases a partly unusable product (for example when using a Java application that will issue warnings every couple of minutes) or even an entirely useless product (for example if browsers in the future simply remove the possibility of making exceptions to the SSL rules).

Self-signed certificates are appropriate for the use case where you control both/all devices in a fairly closed system. You wish to enable encryption on the network level to enhance security, and you’re able to instruct all relevant devices that they must trust this certificate you made. Not practical for a web panel with different users, but for Building Management Controllers that communicate with a predefined set of other devices – sure.
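To illustrate how little a self-signed certificate proves, here is a minimal sketch that creates one with OpenSSL and shows that the certificate vouches for itself. The host name `jace.example.local` and the file names are placeholders chosen for this example.

```shell
WORKDIR=$(mktemp -d)

# Create a private key and a certificate signed by that same key, valid for 365 days.
openssl req -x509 -newkey rsa:2048 -nodes -days 365 \
  -subj "/CN=jace.example.local" \
  -keyout "$WORKDIR/selfsigned.key" -out "$WORKDIR/selfsigned.crt" 2>/dev/null

# Read back who the certificate is for (subject) and who signed it (issuer).
SUBJECT=$(openssl x509 -in "$WORKDIR/selfsigned.crt" -noout -subject | sed 's/^subject= *//')
ISSUER=$(openssl x509 -in "$WORKDIR/selfsigned.crt" -noout -issuer | sed 's/^issuer= *//')

echo "Subject: $SUBJECT"
echo "Issuer:  $ISSUER"
```

Subject and issuer are identical: nobody but the certificate holder has confirmed anything, which is exactly why browsers refuse to trust it without being told to.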

Chain of Trust

The browser reads your certificate, but more importantly it reads who signed your certificate. This would typically be something called an intermediate certificate. The intermediate certificate is signed by a root certificate, and browsers keep a list of the root certificates that they trust. The private keys belonging to root certificates are typically locked away inside the certificate authorities, and never see the light of day. In the day-to-day operation of the certificate authority, they will instead use their intermediate certificates to sign the certificates that they verify.

This architecture ensures that the root certificates are not exposed to misuse, and that in the event of even large compromises “only” intermediate certificates would be affected – in other words, the content would fail in large quantities, but not the system itself. It’s also practical because it leaves browsers with the relatively lightweight task of keeping some hundred root certificates in their trust store (the list of certificates that they trust), as opposed to storing millions of end-entity certificates.

Whether the browser shows a green padlock or throws a warning, then, pretty much boils down to whether the signature that signed your certificate can be traced back to a signature that the browser is happy to trust. This is also why one way of solving these issues is to add your self-signed certificate to the browsers’ trust stores. This, however, is both impractical and risky, as it’s very time-consuming and requires control over end-user devices, and it leaves your installation vulnerable to browsers deprecating the option for the user to ignore certificate-related errors altogether.

These are also pretty much the reasons why all the “hack” solutions, like adding self-signed certificates to internet browsers’ trust stores or instructing users to make exceptions, are not ideal. They essentially violate the idea behind TLS and certificates altogether, and with this – one needs to understand – comes the risk that the hack solutions someday stop working, because security policies in browsers, operating systems, etc. are tightened to only allow the correct implementations.

It is also the reason you cannot simply get a CA to sign a signing certificate for you, and then use it to sign certificates to upload into JACEs all over the place; you would then become a CA yourself and need to adhere to the baseline requirements. Sure, your intentions are only the best, but the system cannot rely on this to be the case – you may as well be signing www.all-the-malware-you-can-eat.com. It certainly would make your life easier, but it would also reduce the security value of verified certificates to a point where we may as well be without them.
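The chain described above can be rebuilt in miniature to see how the tracing works. This is only a sketch under a few assumptions: OpenSSL is installed with its default configuration file, and all names (“Example Root CA”, “Example Intermediate CA”, `site.example.com`) are invented. Real CAs obviously do far more than this.

```shell
DIR=$(mktemp -d)
cd "$DIR" || exit 1

# 1. Root certificate: self-signed, and the only thing the "browser" trusts.
openssl req -x509 -newkey rsa:2048 -nodes -days 30 -subj "/CN=Example Root CA" \
  -keyout root.key -out root.crt 2>/dev/null

# 2. Intermediate certificate: signed by the root and marked as allowed to sign others.
openssl req -newkey rsa:2048 -nodes -subj "/CN=Example Intermediate CA" \
  -keyout inter.key -out inter.csr 2>/dev/null
printf 'basicConstraints=critical,CA:TRUE\nkeyUsage=critical,keyCertSign,cRLSign\n' > ca.ext
openssl x509 -req -in inter.csr -CA root.crt -CAkey root.key -CAcreateserial \
  -days 30 -extfile ca.ext -out inter.crt 2>/dev/null

# 3. End-entity certificate for the site: signed by the intermediate.
openssl req -newkey rsa:2048 -nodes -subj "/CN=site.example.com" \
  -keyout leaf.key -out leaf.csr 2>/dev/null
openssl x509 -req -in leaf.csr -CA inter.crt -CAkey inter.key -CAcreateserial \
  -days 30 -out leaf.crt 2>/dev/null

# 4. With the full chain present, the site certificate traces back to the trusted root.
FULL_CHAIN=$(openssl verify -CAfile root.crt -untrusted inter.crt leaf.crt 2>&1)
echo "$FULL_CHAIN"

# 5. Without the intermediate, the trace is broken and verification fails.
if openssl verify -CAfile root.crt leaf.crt >/dev/null 2>&1; then
  BROKEN_CHAIN=trusted
else
  BROKEN_CHAIN=untrusted
fi
echo "Without intermediate: $BROKEN_CHAIN"
```

Step 5 also hints at why the server should hand out the intermediate certificate together with its own certificate, rather than the site certificate alone.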

Certificate Expiration and how to fight it

Another concept that I see a lot of questions on is that certificates expire. It’s essential, because it’s the main obstacle to simply applying verified certificates to a BMS controller “at birth” or at commissioning – you’d need to go back and renew the certificates quite frequently.

So – why do certificates expire?

It’s really inconvenient, right? Certificates – just like your passport, driver’s license, etc. – expire to prevent misuse and thus increase trust in the system. Your passport expires so that in the event that it is stolen from you, it cannot be used forever. The main difference is that certificates have a much shorter lifespan, often as short as 90 days, but the reasoning is the same.

How do we fight this?

Really, there is only one rational way, if you consider the time consumed on administering this manually; it has to be automatic. It’s the only way to ensure it will not be forgotten or delayed. Fortunately, some certificates support automatic renewal by simply repeating the verification process. More on that a bit later, when we dive into how to actually build a practical solution in the next section.
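An automated renewal ultimately rests on a check like “does this certificate expire soon?”. The sketch below shows one way to ask that question with OpenSSL. The 10-day test certificate and the 30-day threshold are assumptions made for the example, not values taken from any particular client.

```shell
DIR=$(mktemp -d)

# Create a throwaway certificate that is only valid for 10 days.
openssl req -x509 -newkey rsa:2048 -nodes -days 10 -subj "/CN=site.example.com" \
  -keyout "$DIR/site.key" -out "$DIR/site.crt" 2>/dev/null

# Show the expiry date stored in the certificate.
openssl x509 -in "$DIR/site.crt" -noout -enddate

# -checkend N succeeds only if the certificate is still valid N seconds from now.
THRESHOLD=$((30 * 24 * 3600))   # 30 days, expressed in seconds
if openssl x509 -in "$DIR/site.crt" -noout -checkend "$THRESHOLD" >/dev/null; then
  RENEWAL_DUE=no
else
  RENEWAL_DUE=yes
fi
echo "Renewal due: $RENEWAL_DUE"
```

A scheduled job can run a check of this kind as often as you like and only act when the answer is yes.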

YOU’LL LIKELY APPRECIATE THESE GEMS

The first gem

TLS (formerly SSL) is applied to the transport of data. This is also why the newer acronym is clearer: Transport Layer Security vs. Secure Sockets Layer (old). So, TLS applies to the transport of data from the client to the server. It doesn’t apply to the content that the server fetches, so long as the content does not raise other flags, of course, like serving HTTP content inside an HTTPS container, which is obviously considered a security risk.

As the certificates are a component in the TLS mechanism, they are also working point-to-point. That means that you’re able to serve one certificate outbound (the one the user sees) and use another certificate inbound (that e.g. your connection from supervisor to JACE would use).

A vital point to understand, then, is that you only need a signed, trusted certificate at the first point of contact that the user reaches when contacting your system.

The second gem

A network device is reached (providing access is allowed) by a domain name or a host name. The host name identifies the actual device; you could use an IP address like 1.2.3.4 directly, or a more human-friendly alias, like mailserver. If you call your device mailserver, you’d need something to translate that name to the IP address. That something could be a hosts file on your local operating system, or it could be a DNS (Domain Name System) server. They both generally contain entries that in this example could look like this:

127.0.0.1 localhost

1.2.3.4 mailserver

4.3.2.1 company.com

3.4.1.2 support.company.com

The action of performing this translation is called “resolving”, and the result is that the user’s query “hits” the device/server that has the IP address listed in the register. Just as the IP address is used to point to the device, the device itself has a series of “addresses” that services can use for communication: ports. These range from 0 to 65535 and exist in two sets, TCP and UDP. So every IP address/device could have numerous ports/services running on it.
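Resolving against a hosts file is simple enough to imitate in a few lines. The sketch below uses a temporary file with the example entries from above; the `resolve` function is a hypothetical helper written for this illustration, not an operating system command.

```shell
# A stand-in hosts file containing the example entries.
HOSTS_FILE=$(mktemp)
cat > "$HOSTS_FILE" <<'EOF'
127.0.0.1 localhost
1.2.3.4 mailserver
4.3.2.1 company.com
3.4.1.2 support.company.com
EOF

# resolve NAME: print the IP address of the first entry that lists NAME.
resolve() {
  awk -v name="$1" '$1 !~ /^#/ {
    for (i = 2; i <= NF; i++) if ($i == name) { print $1; exit }
  }' "$HOSTS_FILE"
}

resolve mailserver
resolve support.company.com
```

A name that is not listed resolves to nothing, which is when a real system would go on to ask a DNS server.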

Two of these ports are implied by default; port 80 is used for http, and 443 for https. So when you type https://whatever.com in a browser, you are actually requesting whatever.com:443, even though it is not displayed. But “the rule” is that for any query, including the ones made through a browser, you can specify any valid port, though some are reserved for specific purposes. It’s also worth knowing that inbound and outbound ports can be configured independently from one another – more on that later. Each port can have only one binding at any given time, which is to say that only one service may use a port at a time.

This means that you can set up one server on one IP address to host several systems, by calling different external ports, like 8080, 8081, etc.

This also means that you can move external traffic to a different port as it enters the server, e.g. port 443 externally may become port 8443 internally. This is particularly useful because a service needs a port binding to communicate, but also because only one binding may exist for each port.
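The implied-port rule can be written down as a small function. This is a simplified sketch: `effective_port` is a made-up helper that only understands plain http and https URLs, and it ignores special cases such as IPv6 addresses.

```shell
# effective_port URL: print the port a browser would contact for URL.
effective_port() {
  url=$1
  scheme=${url%%://*}      # text before "://", e.g. "https"
  rest=${url#*://}         # text after "://", e.g. "whatever.com:8443/login"
  hostport=${rest%%/*}     # drop the path, keeping "host" or "host:port"
  case "$hostport" in
    *:*) echo "${hostport##*:}" ;;   # an explicit port always wins
    *)
      case "$scheme" in
        http)  echo 80 ;;            # implied for http
        https) echo 443 ;;           # implied for https
        *)     echo unknown ;;
      esac
      ;;
  esac
}

effective_port "https://whatever.com"
effective_port "http://whatever.com/index.html"
effective_port "https://whatever.com:8443/login"
```

The three calls print 443, 80 and 8443 respectively.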

BMS USE-CASE: SERVER-BASED WEBSERVER (Supervisor)

What we hope to achieve:

We would like to serve a user interface from a Niagara Supervisor, and would like to use TLS to provide encryption on the connection from the user to the server. No matter how the user requests the server, TLS/SSL is used.

We want the certificates to renew automatically, so that we don’t have to manage them constantly. We also want no exceptions or other user actions that require instruction or control over the client machines.

I will base the examples herein on a CentOS 7 Linux environment, as Tridium Niagara (finally) supports Linux RHEL, and the certificate process is easier to set up in a Linux environment. You may need to change certain commands if you use a different Linux, but in that case chances are you already know which.

A good place to start is to set up the domain name you would like to use. You can also use the IP address of the server, but it’s slightly bad practice as it will for example be difficult for you to move the environment to a new server, or to cope in case of e.g. cancellation of your server, as you can’t be certain you’ll get the same IP again – in fact it’s unlikely you will. Before you decide on a final domain name (DNS record), see the notes section at the end.

I’ll assume that you have installed the Tridium Niagara Supervisor software, which has the JettyWeb webserver embedded in the application. You should verify/adjust so that the JettyWeb webserver binds to ports 8080 and 8443 respectively, rather than 80 and 443 for HTTP and HTTPS. After this, the webserver should be restarted (right-click JettyWeb Webserver in the Property Sheet and select Action -> Restart).

Setting up an additional webserver

What you’ll want to do now is move the handling of the incoming client connection from the embedded webserver to a webserver that you control. This step is necessary because the embedded webserver doesn’t allow us to finely control certificates – it is built to be lightweight. Since this additional webserver will pass the connection on, we will refer to it as a proxy server – but keep in mind that it’s just software; we’re not installing more physical servers.

I recommend the free NGINX webserver, as it’s well suited for this scenario and will be a very efficient solution.

Acquiring a verified certificate

Let’s Encrypt provides Domain Verified certificates for free, and the process is fully automated, very fast and supports scripted renewals! As with many open source systems, the tools were originally developed for Linux, and Windows support is more of an afterthought. For this reason, there are no official Windows clients, and while the system works convincingly on Linux, I personally haven’t managed to make it function properly on Windows Server.

The DNS A record that you made earlier is key to getting a certificate from Let’s Encrypt. The client application on your server writes a file below the web root, and Let’s Encrypt then requests that file over the internet, using the domain you specified. Only if Let’s Encrypt is able to fetch the file, and its content matches what was expected, is a certificate issued.

To achieve this, we will need to allow outside traffic to reach the file – otherwise Let’s Encrypt won’t be able to find it. We’d like to make sure though, that only those (harmless) files can actually be accessed there.

sudo vi /etc/nginx/default.d/letsencrypt-known.conf

# creates a configuration file in a directory where the NGINX webserver will look for instructions

# in this configuration file, add the following line [note: i to insert (edit), ESC to leave edit mode, :q! to quit without saving, :wq! to save and quit]

location ~ /.well-known { allow all; }

After this, we restart the NGINX server (and you ensure that your firewall allows traffic on ports 80 and 443 to reach the same ports internally). Note that if your server does not support IPv6, you’ll have to comment the IPv6 listen line out of the /etc/nginx/nginx.conf file. After restarting NGINX, we will install the Let’s Encrypt client and ask for a Domain Verified certificate:

sudo systemctl restart nginx

sudo yum install bc -y

sudo git clone https://github.com/letsencrypt/letsencrypt /opt/letsencrypt

sudo /opt/letsencrypt/letsencrypt-auto certonly -a webroot --webroot-path=/usr/share/nginx/html -d your.domain.com

If you set everything up properly, this will output a congratulatory success message. If not, you’ll need to troubleshoot. There are detailed, step-by-step guides for setting up NGINX and Let’s Encrypt on the internet.
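The verification that takes place during the request above can be imitated locally to make the mechanism concrete. This is purely an illustrative sketch: the token and expected content are invented, a temporary directory stands in for the web root, and reading the file from disk stands in for the HTTP request that Let’s Encrypt makes from the outside.

```shell
# Stand-in for the web root passed via --webroot-path.
WEBROOT=$(mktemp -d)

# Hypothetical values; the real client receives and computes these per request.
TOKEN='example-token-123'
EXPECTED='example-token-123.example-account-thumbprint'

# Step 1: the client on your server writes the challenge file below .well-known.
mkdir -p "$WEBROOT/.well-known/acme-challenge"
printf '%s' "$EXPECTED" > "$WEBROOT/.well-known/acme-challenge/$TOKEN"

# Step 2: Let's Encrypt requests http://your.domain.com/.well-known/acme-challenge/<token>.
# Here we simply read the file that the webserver would have served.
SERVED=$(cat "$WEBROOT/.well-known/acme-challenge/$TOKEN")

# Step 3: a certificate is only issued when the served content matches.
if [ "$SERVED" = "$EXPECTED" ]; then
  RESULT=issued
else
  RESULT=refused
fi
echo "Validation result: $RESULT"
```

This is also why the NGINX rule for the .well-known location matters: if the file cannot be reached from the outside, step 2 fails and no certificate is issued.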

Once you’ve acquired the certificate, a good idea is to setup automatic renewal so that you won’t forget it later. This is typically best achieved with a scheduled task.

sudo crontab -e

# inside the file, we want the following lines:

30 2 * * 1 /opt/letsencrypt/letsencrypt-auto renew >> /var/log/le-renew.log

35 2 * * 1 /usr/bin/systemctl reload nginx

# save the file and exit out

This will run the automatic renewal check every Monday at 02:30 and log the results; the client only renews certificates that are close to expiry (the certificate is valid for 90 days). Five minutes later, at 02:35, the webserver is reloaded to ensure it uses the updated certificate.

We need Diffie-Hellman parameters for our TLS setup as well – the certificate is only half of the solution. These are much easier to create, though:

sudo openssl dhparam -out /etc/ssl/certs/dhparam.pem 2048

# note that you can use 4096 for increased security, but it is considered overkill and will take a very long time (45-60 minutes) to complete

# also note that the output is a series of ….+… signs on the screen. This is the generation process of the random parameters. It will take a few minutes.

Only two tasks remain now; telling the proxy webserver to use the certificate created and tell the proxy webserver where to point the traffic.

Applying the verified domain and redirecting traffic

To apply the certificate, you need to tell the proxy webserver that you want it to use it. In fact, this is the whole point of having the proxy webserver. At the same time, you’ll want to setup the port proxying so that traffic on the relevant external ports will be forwarded to the ports corresponding to the embedded webserver. Both of these tasks are achieved through a configuration file, so let’s make one:

sudo vi /etc/nginx/conf.d/your-configurationname.conf

# good practice for this application would be to name the file for the domain name it serves. Like sitename.yourcompany.com.

Again, the editor is opened, and we will need to insert something like the following (at minimum correct the 3 instances of “your.domain-name-here.com”, but in general be sure to review the configuration in its entirety and ensure that it suits your expectations):

server {

listen 80;

server_name your.domain-name-here.com;

return 301 https://$server_name$request_uri; # redirect all to SSL

}

server {

listen 443 ssl;

location / { proxy_pass https://localhost:8443; } # pass through to Niagara

ssl_certificate /etc/letsencrypt/live/your.domain-name-here.com/fullchain.pem;

ssl_certificate_key /etc/letsencrypt/live/your.domain-name-here.com/privkey.pem;

ssl_protocols TLSv1 TLSv1.1 TLSv1.2;

ssl_prefer_server_ciphers on;

ssl_dhparam /etc/ssl/certs/dhparam.pem;

ssl_ciphers 'ECDHE-RSA-AES128-GCM-SHA256:ECDHE-ECDSA-AES128-GCM-SHA256:ECDHE-RSA-AES256-GCM-SHA384:ECDHE-ECDSA-AES256-GCM-SHA384:DHE-RSA-AES128-GCM-SHA256:DHE-DSS-AES128-GCM-SHA256:kEDH+AESGCM:ECDHE-RSA-AES128-SHA256:ECDHE-ECDSA-AES128-SHA256:ECDHE-RSA-AES128-SHA:ECDHE-ECDSA-AES128-SHA:ECDHE-RSA-AES256-SHA384:ECDHE-ECDSA-AES256-SHA384:ECDHE-RSA-AES256-SHA:ECDHE-ECDSA-AES256-SHA:DHE-RSA-AES128-SHA256:DHE-RSA-AES128-SHA:DHE-DSS-AES128-SHA256:DHE-RSA-AES256-SHA256:DHE-DSS-AES256-SHA:DHE-RSA-AES256-SHA:AES128-GCM-SHA256:AES256-GCM-SHA384:AES128-SHA256:AES256-SHA256:AES128-SHA:AES256-SHA:AES:CAMELLIA:DES-CBC3-SHA:!aNULL:!eNULL:!EXPORT:!DES:!RC4:!MD5:!PSK:!aECDH:!EDH-DSS-DES-CBC3-SHA:!EDH-RSA-DES-CBC3-SHA:!KRB5-DES-CBC3-SHA';

ssl_session_timeout 1d;

ssl_session_cache shared:SSL:50m;

ssl_stapling on;

ssl_stapling_verify on;

add_header Strict-Transport-Security max-age=15768000;

}

Notice how we are also redirecting port 80 traffic? This is a big thing for our user-friendliness, because port 80 is what is queried when we ask for a domain without stating a scheme; the browser then assumes HTTP, which implies port 80, whereas port 443 is implied for HTTPS. That means that when we write www.controel.dk we are asking on port 80. If you omit this part, your users must either query www.controel.dk:443 or https://www.controel.dk to reach the same domain. With this redirect in place, the user can simply type the URL and everything just works.

End Result

People connecting to the server will be placed in the https:// scheme and pointed to the login screen of Niagara. The webserver in Niagara will take care of the actual content. The URL line will show a green padlock and the original URL, there will be no browser warnings, and from this point onwards you can (almost) forget about the actual server itself, and simply concentrate on your Niagara installation.

Your users will experience the same as is shown on the screenshot below.

Niagara instance secured with Domain Verification Certificate, as it appears in (from top left) Mozilla Firefox, Google Chrome, Microsoft Edge and Microsoft Internet Explorer 11.

BMS USE-CASE: Embedded Webserver in Controller (JACE)

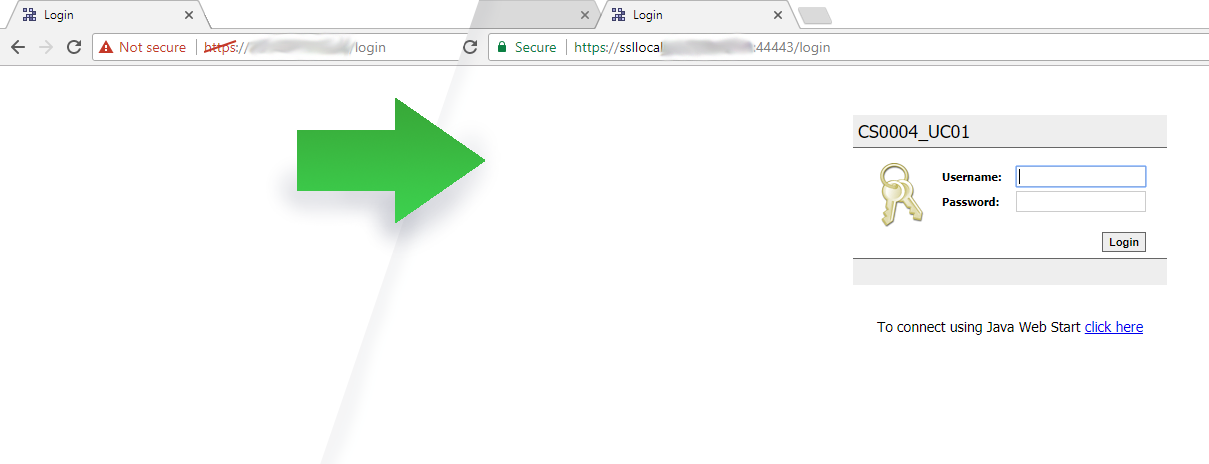

Even if using a local JACE, you can still ensure that your users will have a pleasant experience logging in, and that your inbox will be free of questions about how your client changed PC and now needs “that thing you did last time to fix the browser”:

Left: Accessing a JACE (self-signed certificate) directly. Right: Accessing same JACE (unchanged) through a proxy server.

The third gem

In the case of a server-hosted user interface, we set up a proxy webserver to serve our verified certificate to the user and to gain the liberty of handling the certificate and connection under more flexible circumstances than those in the embedded webserver. That architecture is not limited to the same device or even network.

Using port proxies you may direct the traffic to any resource you want. Obviously, you still have to adhere to best practices to create a secure installation and avoid warnings in the browser – and obviously the destination must be accessible from the proxy server. But you can point the traffic on to an entirely different device, and that is what you’ll utilize now.

Therefore, the process is pretty much exactly the same as above – only with the changes that the Supervisor isn’t installed on the same server, and that there are a few things to do when connecting to a different network.

Prerequisites

Since we rely on a proxy server placed remotely, we will need access from the internet to the JACE, and the site needs a static (not changing) IP address.

In any case, you’ll probably need to involve the client’s IT staff, as you’ll need the traffic on (any) two available ports to not only be accepted but also directed to the LAN IP of the JACE. Since the JACE needs to communicate bidirectionally, and no local proxy server is involved, it’s sensible to set up the remote proxy (your server in the cloud) to forward to a port that is identical to the port allowed through the network, and identical to the port set up in the JACE embedded webserver (Jetty Webserver) under HTTPS port. This should eliminate gotchas like traffic not being properly directed between ports.

You should also prepare a document for your client to store that shows how to connect to the JACE (using the domain you’ve made available to them), but also details how they can connect to the JACE locally in the event of a failure in the proxy setup: the JACE’s LAN IP, any exceptions they may need to allow in their browser, etc. This document should be on hand, so that in the event of an internet failure or a malfunction in your setup, the client is still able to access the system without delay.

Finally, you really should always – but particularly in this case – set up your JACE to only accept encrypted connections, in foxs, platformssl and https alike. The IT staff at the client site need to understand that they should only forward the specific ports to your JACE, and that these should not be commonly used ports.

How will it work?

In the day-to-day operation, your client will connect to the domain (DNS A record) you made. That could be for instance site.your-domain.com. On that address, the proxy webserver will direct the client into the HTTPS scheme, applying the domain verified certificate. It will then pass the user’s connection on to the WAN IP of the client site, where a port forward (or equivalent) ensures that the query is forwarded to the JACE on the port on which the embedded Jetty Webserver in the JACE listens. The client experiences no warnings, and the connection is secure and will be indicated as such by the browser.
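As a rough sketch of what the proxy configuration for this flow could look like, the script below writes an NGINX configuration file for a remote JACE. Everything specific in it is an assumption made for illustration: the WAN IP 203.0.113.10 comes from a documentation address range, port 48443 is an arbitrary uncommon port, and a temporary directory stands in for /etc/nginx/conf.d. The TLS hardening directives from the Supervisor example are left out for brevity.

```shell
CONF_DIR=$(mktemp -d)          # stand-in for /etc/nginx/conf.d
SITE=site.your-domain.com      # the DNS A record made for this client
JACE_WAN_IP=203.0.113.10       # hypothetical static WAN IP of the client site
JACE_PORT=48443                # hypothetical port forwarded to the JACE HTTPS port

# \$ keeps NGINX variables literal; $SITE, $JACE_WAN_IP and $JACE_PORT are filled in.
cat > "$CONF_DIR/$SITE.conf" <<EOF
server {
    listen 80;
    server_name $SITE;
    return 301 https://\$server_name\$request_uri; # redirect all to SSL
}

server {
    listen 443 ssl;
    server_name $SITE;

    ssl_certificate /etc/letsencrypt/live/$SITE/fullchain.pem;
    ssl_certificate_key /etc/letsencrypt/live/$SITE/privkey.pem;

    # pass through to the JACE behind the port forward at the client site
    location / { proxy_pass https://$JACE_WAN_IP:$JACE_PORT; }
}
EOF

cat "$CONF_DIR/$SITE.conf"
```

By default NGINX does not verify the certificate of the server it proxies to, which is why the unchanged self-signed certificate in the JACE does not get in the way on that leg of the connection.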

In the event of a failure – maybe you forget to pay the bill for the server rent, maybe the clients IT staff forget why the port forward is in place and remove it or maybe the internet connection at the site is unstable – the client is able to connect directly to the LAN IP of the JACE like always. In this event the client will see warnings about your self-signed certificate, but will (for now, atleast) be able to add an exemption in their browser to access the JACE directly.

Note that this works with most embedded webservers and clients, but is not a universal “fix”. For example, Niagara AX works through Hx profiles, but not through Java/WebStart. Since most people following this guide will be making new deployments using HTML5 Hx profiles in Niagara 4, this shouldn’t present an issue and hasn’t warranted actual attention from me. I assume that the issue could be solved simply by forwarding the additional port(s) used for the chosen authentication scheme in Niagara when serving the content through Px/Java. It is likely that this would require NAT/masquerade on the proxy webserver, but honestly I haven’t looked into it.

Notes

Should controllers be allowed internet access?

Most manufacturers will argue that controllers should simply not be connected to the internet. This is derived from the basic – and correct – perspective that it is impossible to build lasting security. Locks from the 1800s are laughable today, however effective they may have been at the time. Similarly, we must accept that we do not have the full picture. We do not know if the encryption used today is as unbreakable as we think, and we do not know if agencies around the world have backdoors in encryption algorithms that attackers may also exploit. We simply cannot afford the arrogance of thinking that it cannot go wrong.

However, we must also accept that there are factors weighing on the other side of the scales. At the time of writing, we are halfway through 2017. Most clients today will expect to be able to access their (non-vital) systems from everywhere, and they will certainly want you to be able to troubleshoot in minutes rather than hours. Given the nature of the things connected to the internet today (power plants, traffic lights, …), we should be able to connect a Building Management System to the internet without seeing the end of the world.

How does this affect the security of the installation?

If we accept that the controller will be connected to the internet, the setup in this guide will only serve as an improvement in terms of security compared with simply connecting the controller directly. This is because we now have a flexible and secure platform acting as a checkpoint, on which we can (and should) set up safeguards that a controller would never be able to provide. When I configure these solutions, I always put DDoS mitigation mechanisms in place. Sure, a botnet and experienced hackers may be able to beat them, but it is still miles better than exposing the JACE embedded webserver itself.
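Which safeguards fit is site specific. As one illustration of what a checkpoint can do that a controller cannot, a per-client rate limit could look roughly like the sketch below. The class name, the limits and the injectable `clock` parameter are all assumptions made for the example. In practice this kind of limit would normally be configured in the proxy or firewall itself rather than written by hand, and on its own it does nothing against a large volumetric attack.

```python
import time
from collections import defaultdict, deque
from typing import Callable, Deque, Dict


class SlidingWindowLimiter:
    """Allow at most max_requests per client within any window_seconds interval."""

    def __init__(self, max_requests: int, window_seconds: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        self.clock = clock  # injectable so the behaviour can be tested without waiting
        self._hits: Dict[str, Deque[float]] = defaultdict(deque)

    def allow(self, client_ip: str) -> bool:
        """Record the request and return True, or return False if the client is over its limit."""
        now = self.clock()
        hits = self._hits[client_ip]
        # Forget requests that have fallen out of the window.
        while hits and now - hits[0] >= self.window_seconds:
            hits.popleft()
        if len(hits) >= self.max_requests:
            return False
        hits.append(now)
        return True


if __name__ == "__main__":
    limiter = SlidingWindowLimiter(max_requests=5, window_seconds=60.0)
    for attempt in range(1, 8):
        print(attempt, limiter.allow("203.0.113.10"))  # documentation-range example IP
```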

Compare it to a train line between an international airport and the city center. The two are connected no matter what, but if we add a platform in the middle, where all passengers must disembark and pass through metal detectors, trained dogs, body scanners and guards, the risks to the city center are reduced overall. Similarly, we can subject the traffic to the BMS to various checks to increase the overall security of the remote access.

Risks

DNS Brute Force

Brute force describes trying possible solutions one by one until successful or prevented. You have probably heard the term in relation to guessing passwords, as this attack is the primary reason why passwords should consist of several characters. The same form of attack can be applied to DNS records. If, for example, company.com is the target of such an attack, a.company.com, b.company.com … zzzzzzzzzzzzzzzzzzzzzzzz.company.com may be tried systematically.
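The enumeration described above can be sketched in a few lines. The sketch only generates candidate names and counts them, it performs no DNS lookups. The function names are made up for the example, and real tools typically also work from word lists rather than plain letter combinations.

```python
import itertools
import string
from typing import Iterator

ALPHABET = string.ascii_lowercase  # assumption: letters only, for simplicity


def candidate_hosts(domain: str, max_len: int) -> Iterator[str]:
    """Yield a.domain, b.domain, ..., up to labels of max_len letters."""
    for length in range(1, max_len + 1):
        for chars in itertools.product(ALPHABET, repeat=length):
            yield "".join(chars) + "." + domain


def search_space(max_len: int, alphabet_size: int = len(ALPHABET)) -> int:
    """Number of candidate labels of length 1..max_len."""
    return sum(alphabet_size ** n for n in range(1, max_len + 1))


if __name__ == "__main__":
    print(list(itertools.islice(candidate_hosts("company.com", 2), 3)))
    for n in (2, 5, 10):
        print(n, search_space(n))
```

The count grows by a factor of the alphabet size with every extra character, which is why short, guessable labels are found quickly while long random ones are not.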

By this mechanism, it is possible to map which subdomains a given domain uses. DNS entries are not secrets. But they exist on a virtual level – you can’t actually connect them to a physical location.

Because of this, there are a few precautions that would be relevant to consider:

- Consider employing a separate domain for your customer site solution. If your company is called companya.com online, consider *.companyahosting.com to separate customer sites from attacks on your main domain. Sophisticated and manual attacks may still discover them, but scripted attacks probably will not.

- On a key tag, you never write the name or address of the physical location that the key unlocks. With DNS entries, never link them to a physical location either; it only makes ransomware attacks that much easier. Ideal would be random characters similar to passwords, but those are not user-friendly (fAth26naT7hg2ui.companyahosting.com). Second best would be assigning each site a random number that is manageable, e.g. site49284.companyahosting.com. Also acceptable would be an incremental number, for example 12.companyahosting.com; the disadvantage is that your clients will easily be able to check if there are other clients that they can “test” (also by accident). This risk is acceptable though, considering that all they will get is a login screen, where strong password policies should always be enforced and default user credentials removed.

- Remember that the above also applies to any information that is publicly visible. The name of a station in Niagara is shown on the login screen, so it should probably follow the same guidelines as the DNS entries rather than being something like “Baltimore_City_Hall”.
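The two naming schemes suggested above, random characters and a random site number, could be generated roughly as follows. This is a sketch with made-up function names. It uses lowercase letters and digits only, since DNS names are not case sensitive, and it assumes you keep your own record of which labels are already in use.

```python
import secrets
import string
from typing import Set

LABEL_ALPHABET = string.ascii_lowercase + string.digits


def random_site_label(length: int = 15) -> str:
    """Password-like label, e.g. for <label>.companyahosting.com."""
    return "".join(secrets.choice(LABEL_ALPHABET) for _ in range(length))


def numbered_site_label(existing: Set[str], digits: int = 5) -> str:
    """Label of the form site49284 that is not already in use.

    Keeps drawing until it finds a free label, so it assumes the number
    space is nowhere near exhausted.
    """
    while True:
        label = "site" + "".join(secrets.choice(string.digits) for _ in range(digits))
        if label not in existing:
            return label


if __name__ == "__main__":
    in_use = {"site49284"}
    print(random_site_label() + ".companyahosting.com")
    print(numbered_site_label(in_use) + ".companyahosting.com")
```

The same labels could double as station names, so that the login screen reveals no more than the DNS entry does.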

Hardening

Please be aware that this article – and any step-by-step guides or similar that may follow – deals with one defined aspect of IT security and is by no means a complete guide. The hardening of IT systems is a complicated science that should always be adapted to the specific scenario.

Examples of very sensible things to keep in mind that are not otherwise covered in this article are DDoS attack mitigation, packet drop policies, brute force policies, rootkit scanning, firewall and port policies, proper file system permissions across distributed user accounts, hardening of external management and connection utilities, and much more.
